This bad boy arrived last week. The Gigabyte GeForce GTX 750 Ti Black Edition.

It's Gigabyte's latest release, a graphics card with the NVIDIA GeForce GTX 750 Ti GPU. It's positioned, logically, between the lower end GTX 750 GPU and the higher end 760 series. It has two DVI outputs and two HDMI outputs, allowing it to use the GPU's full capability to run four displays. 4K is supported via both HDMI outputs running at once into a supported display. Notably and disappointingly, there is no DisplayPort or Mini DisplayPort connectivity, which has been the norm for GPUs from competitor ATI - and there are some variants of the 750Ti on the market with DP support. One DVI port is capable of Dual-Link, permitting higher resolutions as required by 27" displays or 120Hz+ gaming displays. Both HDMI sockets are 1.4 capable - we have been unable to test the higher resolution capability but we expect it to work.

The dual-link port is also the one that supports VGA, so if you wish to use a dual-link display along with an analog output, you're out of luck. The not-so-good news gets worse for customers who wish to use NVIDIA's G-SYNC technology to eliminate tearing and provide a smoother lag-free display experience (with supported displays) - this requires DisplayPort, which is absent from this product.

As far as numbers go, Gigabyte tell us that this is their highest performing 750Ti, clocked at a 1163MHz base with a 1242MHz boost, compared to 1033MHz/1111MHz for their "Overclocked" model. It has 2GB of memory, the norm for 750 Ti cards and double the 1GB specification of the standard 750 series GPUs. It sports their Black Edition moniker, including a certificate in the box to drive home the point that this has passed "server grade" 168 hour burn-in tests at Gigabyte's factories prior to being packaged up and sent out.

Gigabyte also boldly state that this product features Ultra Durable 2 technology, which tells us that the components used on the card are of high quality and are chosen for their ability to provide cleaner power to the card, produce less heat and last longer.

Cooling is provided by an enhanced version of Gigabyte's WindForce "anti-turbulence parallel inclined" cooling system. The Black Edition includes a metal housing for the fans instead of the plastic used on some of their other models. This GPU is designed to take up two slots.


This is also the most expensive 750Ti series GPU on Scorptec's website, even edging out the faster-on-paper and DisplayPort-equipped EVGA models. It should be noted that the EVGA cards won't drive 4K displays using dual HDMI ports, however.

Power Consumption and TDP; The Maxwell future for NVIDIA looks bright.

The 750Ti GPU is a great advancement in power consumption and efficiency. Based on NVIDIA's latest "Maxwell" architecture, it includes developments even newer and more advanced than those in their performance leading 780 Ti and Titan series. This GPU has a Thermal Design Power (TDP) that sits at around 68W - and that's very important. This card uses around half the amount of power (and consequently produces half the amount of heat) of the outgoing 650 Ti model and runs circles around it in terms of performance.

It means less heat, which can lead to more creative system builds. It also means less power consumption, making the card compatible with the majority of existing power supplies without having to upgrade that component, too. And given the low TDP, the easily removable heatsink and fan assembly could be replaced with a custom passively cooled design, useful for ultra quiet system builds in HTPC or sound sensitive environments such as recording studios.

It's also friendlier on the environment, if that's your thing.

The ability for the 750 Ti GPU to be powered from the motherboard's PCI-E slot alone is handy, and some incarnations of the 750 Ti don't even include the 6 pin connector, which is mandatory if you want to perform overclocking or unlock the power limiters on the GPU. It's also a good idea to use it - included in the box is a twin Molex to 6 pin adapter. Earlier this year, we encountered a situation where a motherboard wouldn't output the full 75W on the PCI-E slot, likely due to a latent fault with the motherboard or even a design flaw.

The Black Edition, however, requires the 6 pin PCI-E connector to be connected or else the card's BIOS will prevent the system from booting.

An unusual competitor - the AMD Radeon R9 280X...

There has been a rise in the use of computational power to create cryptocurrencies - a process known as "mining". AMD's ATI Radeon products have long been a market leader in this space. Bitcoin was the first, its algorithms perfectly suited to GPU based processing, which was far faster and more efficient than CPU-based mining. As custom-designed ASIC processors became available, attention turned to the scrypt-based Litecoin and its derivatives. These became popular really quickly and, as recently as six months ago, we saw people rushing out and building "mining rigs" - PCs or even custom enclosures running as many Radeon R9 series graphics cards as they could fit, along with multiple high performance PSUs. Some PC retailers would place limits on the number of ATI GPUs one could buy in order to keep some for the gaming systems they were building - it wasn't uncommon to witness someone walking in to a PC retailer and asking for between 4 and 8 R9 280X graphics cards. The theory was that they'd make their money back in mere months.

Unfortunately, as more of these cryptocurrency coins are found, it becomes computationally harder to find more. They call this the "difficulty". One year ago, you could expect to make your money back in a few weeks on an R9 280X. Today, it's a bottomless pit - given the power draw and the outlay for a GPU, it's not worth the return. ASIC processors were released to process scrypt far more efficiently than any GPU and the mining community bailed - flooding the market with tens of thousands of R9 280X graphics cards. As we all know, when supply outstrips demand, prices drop.

The end result is that the 750 Ti's competition isn't the R9 260 or 270 series - it's the secondhand R9 280X, which comes in at over twice the performance of a 750Ti for around the same price, albeit with a significant increase in power usage: 250W vs the 750Ti's 68W. This is a problem for NVIDIA to overcome.

And then there's the ATI driver software, which has a long standing reputation for stability issues. And there are growing concerns about buying a card used for mining, which has been driven at 100% load the whole time and can be expected to have limited life left in it.

Installation

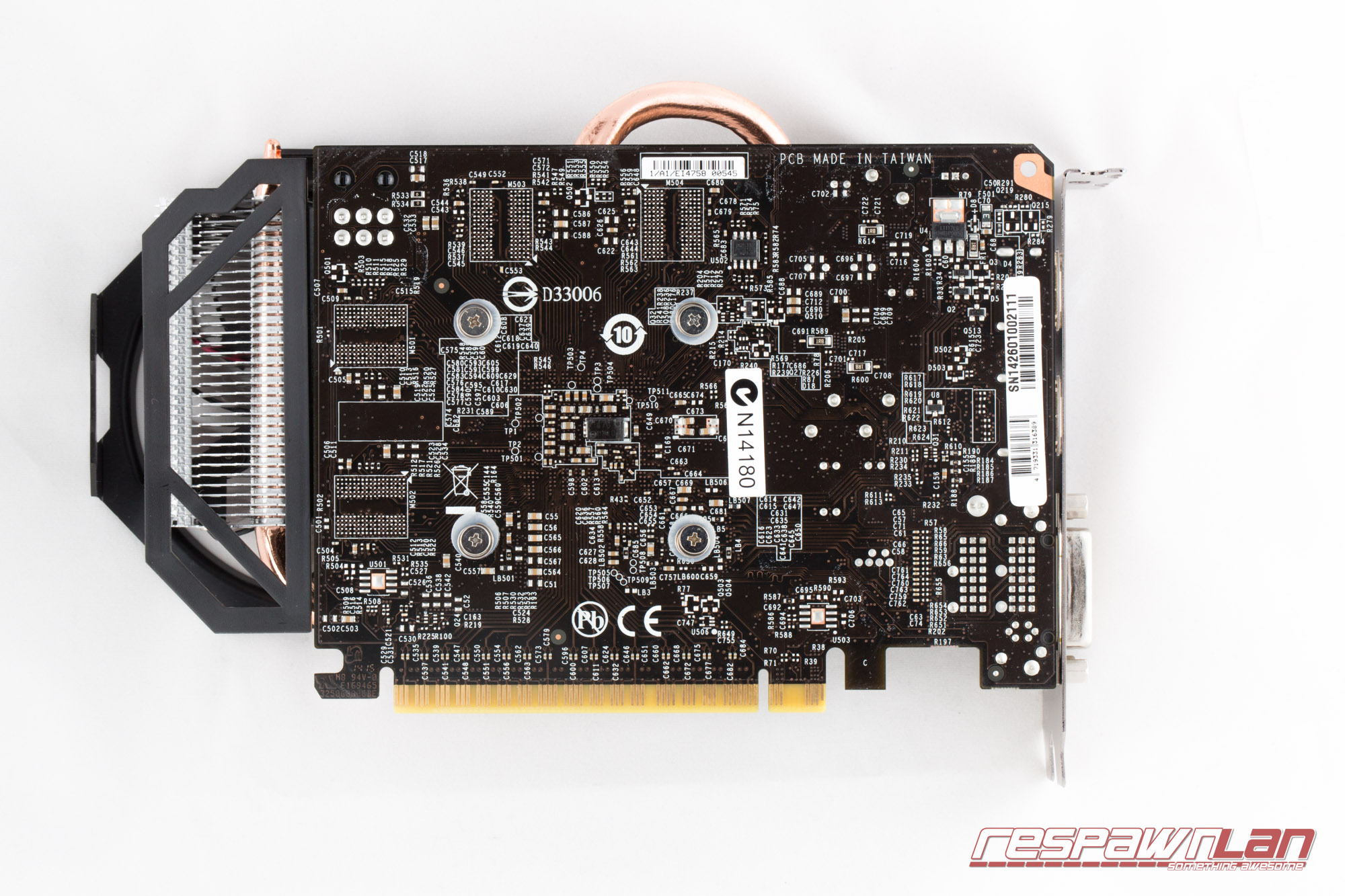

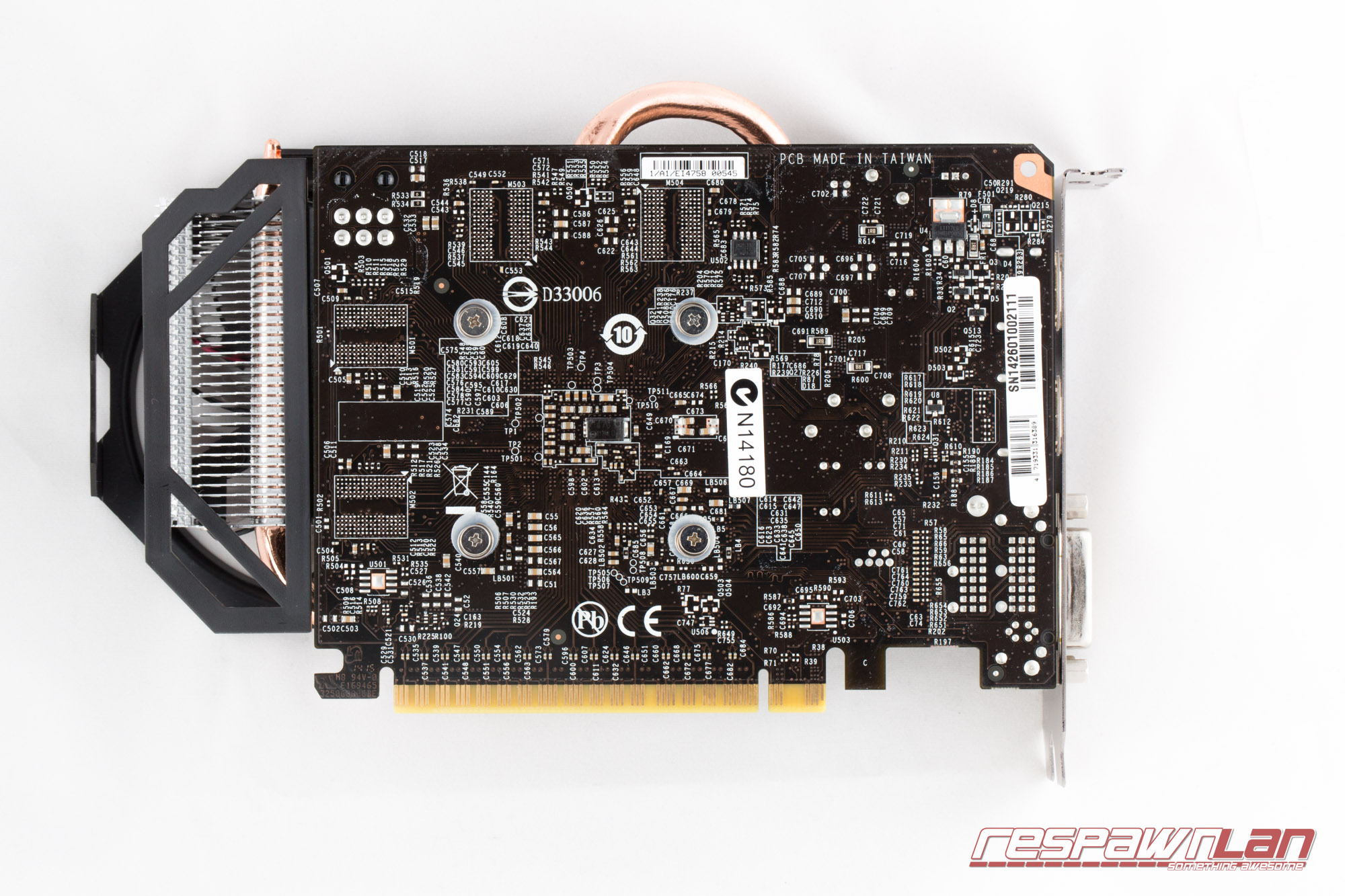

The card is small, making it a straightforward installation for most PC cases including those with limited space. The build of the card feels solid. A single 6 pin power connector makes cable management easier.

Installation was a breeze. Coming from an existing NVIDIA card, all that was required was to open up the GeForce Experience, hit update to ensure we had the latest drivers, shut down the system, swap GPUs, turn it on again and be up and running. Updating GPU drivers within the GeForce Experience app doesn't require a reboot, either.

Power usage


According to Corsair Link, at idle we saw around 20W less usage than with the GTX 670, with a total system usage of 212W. With the Fire Strike benchmark running, we saw the power usage peak at 276W, which is a 64W increase at full speed. With the benchmark running, we saw the temperature hit a top of 46°C in a relatively poorly ventilated case. The fan was running at around 1900 RPM and was still relatively quiet in the case. This makes the card a good choice for small systems with limited airflow or where low noise is paramount.

For comparison, a Radeon R9 280X brings the system idle usage to 235W. Keep in mind this is when the system is at the Windows desktop, so it's not doing much at this stage.
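To put those wall readings side by side, here's a quick Python sketch of the arithmetic. It only uses the whole-system figures quoted above; the variable and function names are our own and nothing here is measured beyond what Corsair Link reported.

```python
# Whole-system power readings quoted in this review (watts, via Corsair Link).
IDLE_750TI_W = 212      # system idle with the 750Ti installed
LOAD_750TI_W = 276      # system peak with the 750Ti under benchmark load
IDLE_R9_280X_W = 235    # system idle with the R9 280X installed


def delta_watts(higher_w: int, lower_w: int) -> int:
    """Difference between two whole-system readings, in watts."""
    return higher_w - lower_w


# Extra draw when the 750Ti goes from idle to full load.
load_increase_w = delta_watts(LOAD_750TI_W, IDLE_750TI_W)

# Idle saving of the 750Ti system compared with the R9 280X system.
idle_saving_vs_280x_w = delta_watts(IDLE_R9_280X_W, IDLE_750TI_W)

print(f"750Ti idle to load: +{load_increase_w}W")
print(f"750Ti idle saving vs R9 280X: {idle_saving_vs_280x_w}W")
```

Note that these are system totals, so the idle-to-load jump also includes whatever extra the CPU and the rest of the system draw during the benchmark.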

Test Systems

We gave this GPU a spin in two test systems to generate some benchmark results which are hopefully meaningful for users with this GPU. Our first system for testing is the well known monolith, housed in a Lian Li V2000B and equipped with the following:

- Gigabyte Z77X-UD4-TH motherboard

- Intel 3770K at stock

- 32GB PC3-1600R G-Skill memory (4x8GB)

- Western Digital 1TB VelociRaptor 10KRPM hard drive

- Corsair AX1200i power supply

- 3ware 9650SE-24M8 RAID controller

- ASUS Xonar Essence STX sound card

- Dell 3007WFP-HC display (dual-link DVI)

- Windows 8.1

The second system is a Mac. Of note, the 750Ti GPU will not show the EFI boot loader, as it doesn't contain Mac compatible UEFI code. That is par for the course, so you're flying blind until the OS starts booting. The good news is that the Boot Camp BIOS will boot Windows 8.1 with full interactivity, and OS X 10.9.4 has native support for this GPU. More good news is that the 68W TDP is natively supported by the Mac Pro - other GPUs such as the R9 280X require far more power than the system can supply. The motherboard on this system, a costly dual slot backplane, contains the power outlets for two 6 pin PCI-E connectors - capable of supplying two GPUs each with 75W to supplement the 75W from the motherboard's PCI-E connector - or a single GPU with an additional 150W. This brings the power total for a single GPU up to 225W, which, in the case of the 250W ATI card, isn't a good idea. Overloading these outlets can cause damage to the motherboard and the power supply will shut off after a few minutes.
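As a rough sanity check of that power budget, here's a minimal Python sketch. It assumes 75W from the PCI-E slot and 75W per 6 pin connector, as described above, and treats each card's quoted TDP as its worst-case draw, which is a simplification. The function names are our own invention.

```python
SLOT_W = 75      # power available from the PCI-E slot itself
SIX_PIN_W = 75   # power available from each 6 pin PCI-E connector


def available_power_w(six_pin_connectors: int) -> int:
    """Total power a single card can draw from the slot plus its 6 pin feeds."""
    return SLOT_W + six_pin_connectors * SIX_PIN_W


def fits_budget(tdp_w: int, six_pin_connectors: int) -> bool:
    """True if the card's TDP is within the slot + connector budget."""
    return tdp_w <= available_power_w(six_pin_connectors)


# TDP figures as quoted in this review.
cards = {"GTX 750 Ti": 68, "Radeon R9 280X": 250}

# The Mac Pro backplane offers two 6 pin outlets, so a single card gets at most 225W.
for name, tdp_w in cards.items():
    budget_w = available_power_w(2)
    verdict = "OK" if fits_budget(tdp_w, 2) else "over budget"
    print(f"{name}: {tdp_w}W TDP vs {budget_w}W available -> {verdict}")
```

The 750Ti would even fit within the slot's 75W alone on paper, though this Black Edition still insists on having its 6 pin connector plugged in.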

The system originally shipped with a Radeon 5770 - a slouch by today's standards, but we wanted to see what would be gained by the upgrade. Many people have an older generation gaming PC and would like to know what to do in order to bring it up to spec to bring to LAN events and play more modern titles such as CS:GO, so we wanted to see if we could give this aging Mac a new lease of life. The 5770 had a 108W TDP and a noisier fan at high load.

- Apple Mac Pro 2009

- 2x Xeon E5520 2.26GHz quad core CPUs

- 8GB DDR3 PC10666 ECC memory

- Samsung Evo 840 Pro 256GB SSD

- HP LP2475w (DVI input)

- Windows 8.1

GPUs tested:

- Gigabyte GTX670

- ATI Radeon 5770 (Apple OEM supplied in Mac Pro)

- MSI Radeon R9 280X Gaming

- Gigabyte 750Ti Black Edition

Benchmarks and test results

We've only run a few tests on 3DMark so far to give us a rough idea as to how this product stands compared to a GTX 670 and a Radeon R9 280X.

While running these benchmarks, we found that the fans on the Gigabyte Black Edition 750Ti were practically silent. Even at a slight speed increase, they were absolutely inaudible over ambient system noise. Even with one's ear right up to the GPU, there was no sign of any uncomfortable noises, rattling or jet engine sounds coming from the GPU's fan assembly. Every other card we tested took it upon itself to attempt to take off on Runway 34L bound for a pair of noise cancelling headphones.

3DMark 2014

For 3DMark, we ran a series of tests on both systems. These are artificial benchmarks. Of note, the 750Ti GPU wasn't verified by 3DMark at time of testing.

We ran the benchmarks in both the Mac Pro and the monolith to see how CPU bound the benchmarks were - guess what - the scores were almost identical between both CPUs. The GPU swaps, however, told a completely different story.

Cloud Gate

Gigabyte GeForce GTX 750Ti Black Edition, E5520: 18779

Gigabyte GeForce GTX 750Ti Black Edition, 3770K: 18089

Gigabyte GeForce GTX 670, 3770K: 20503

ATI Radeon R9 280X, 3770K: 21221

ATI Radeon 5770, E5520: 12612

Sky Diver

Gigabyte GeForce GTX 750Ti Black Edition, E5520: 13740

Gigabyte GeForce GTX 750Ti Black Edition, 3770K: 14037

ATI Radeon 5770, E5520: 6694

Gigabyte GeForce GTX 670, 3770K: 18407

ATI Radeon R9 280X, 3770K: 20230

Fire Strike

Gigabyte GeForce GTX 750Ti Black Edition, E5520: 4356

Gigabyte GeForce GTX 750Ti Black Edition, 3770K: 4374

ATI Radeon 5770, E5520: 1969

Gigabyte GeForce GTX 670, 3770K: 6290

ATI Radeon R9 280X, 3770K: 7350

Fire Strike Extreme

Gigabyte GeForce GTX 750Ti Black Edition, 3770K: 2163

Ice Storm

Gigabyte GeForce GTX 750Ti Black Edition, E5520: 95403

Gigabyte GeForce GTX 750Ti Black Edition, 3770K: 118634

ATI Radeon 5770, E5520: 89276

Gigabyte GeForce GTX 670, 3770K: 134882

ATI Radeon R9 280X, 3770K: 135639

3DMark 11

3DMark 11 was run on the Mac Pro for some testing between the 5770, a five year old GPU, and the 750Ti. Settings were set to Extreme. When the 5770 was shipping, the GPU cost around $400. We saw X904 for the 5770 and X1981 for the 750Ti, over 2x the performance. For old systems such as this, there's a clear-cut benefit to popping a new GPU in, without the hassle and worry of needing to upgrade the PSU.
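To see how that "over 2x" claim holds up across the other tests, here's a small Python sketch that divides the 750Ti score by the 5770 score for each benchmark. The scores are the ones listed in this review; we've assumed all 5770 results come from the Mac Pro (E5520), since that's the system it shipped in, and the dictionary layout is just our own convenience.

```python
# (Radeon 5770 score, GTX 750Ti score), both assumed to be from the Mac Pro (E5520).
scores = {
    "Cloud Gate": (12612, 18779),
    "Sky Diver": (6694, 13740),
    "Fire Strike": (1969, 4356),
    "Ice Storm": (89276, 95403),
    "3DMark 11 Extreme": (904, 1981),
}


def uplift(old_score: int, new_score: int) -> float:
    """How many times faster the new card scored than the old one."""
    return new_score / old_score


uplifts = {test: uplift(old, new) for test, (old, new) in scores.items()}

for test, ratio in uplifts.items():
    print(f"{test}: {ratio:.2f}x")
```

The heavier the graphics load, the bigger the gap: Fire Strike and 3DMark 11 Extreme both land above 2x, while the lightweight Ice Storm test barely moves, which suggests it's held back by the CPU rather than the GPU on this system.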

Games

For these tests, the monolith PC (3770K) was used with the GeForce GTX 750Ti card and FRAPS to record the benchmarking. FRAPS is a tool which can run in benchmark mode and record the frame rate. If you'd like to see how our tests stack up to your system, grab a copy of the free FRAPS tool to compare:

CS:Global Offensive, 1920x1080, all maximum settings (8x MSAA, 16x Anisotropic), trilinear: Average: 182, Minimum: 130, Maximum: 281

CS:Global Offensive: 2560x1600, all maximum settings: Average: 113, Minimum: 57, Maximum: 291

FlatOut2, 2560x1600, maximum settings: Average: 87, Minimum: 69, Maximum: 105

Team Fortress 2: 1920x1080: Average: 204, Minimum: 111, Maximum: 300

Team Fortress 2: 2560x1600: Average: 123, Minimum: 84, Maximum: 300

Mining

We alluded to Litecoin mining before. The great news for you (and NVIDIA) is that the 750Ti is a decent performer for scrypt based cryptocurrencies, coming in at around 290 khash/sec whilst staying within its power limit. Compared to the 250W-consuming R9 280X delivering 750 khash/sec on a good day, we see an immediate benefit here. If NVIDIA had shipped this technology in a ~$450 GeForce product delivering double the performance with double the power consumption of the 750Ti, these things would have flown out the door.

On a performance per watt basis: the R9 280X runs at about 3 khash/watt, while the GeForce delivers 4.46 khash/watt.
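For anyone wanting to redo the sums, here's a minimal Python sketch of the performance per watt calculation. The hash rates are the ones quoted above; the wattages are assumptions, since we only have the TDP figures (68W and 250W) rather than a measured draw while mining. The function name is our own.

```python
def khash_per_watt(khash_per_sec: float, watts: float) -> float:
    """Mining efficiency: hash rate divided by power draw."""
    return khash_per_sec / watts


# Hash rates quoted in this review; wattages assumed equal to each card's TDP.
r9_280x_eff = khash_per_watt(750, 250)
gtx_750ti_eff = khash_per_watt(290, 68)

print(f"R9 280X:   {r9_280x_eff:.2f} khash/watt")
print(f"GTX 750Ti: {gtx_750ti_eff:.2f} khash/watt")

# Power draw that a 4.46 khash/watt figure would imply at 290 khash/sec.
implied_watts = 290 / 4.46
print(f"Implied 750Ti draw at 4.46 khash/watt: {implied_watts:.0f}W")
```

At the full 68W TDP the 750Ti works out slightly lower than 4.46 khash/watt; that figure lines up with a draw of roughly 65W. Either way, the GeForce comes out well ahead of the R9 280X on efficiency.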

The GeForce Experience

With 331.82 installed, the plan was to simply swap to the 750Ti and be good to go with the new drivers. Unfortunately, the GeForce Experience tool claimed, even after checking for updates, that the R331 (331.82) driver was the latest available. Cruising to the NVIDIA website, 337.50 was showing as the latest WHQL release, with 340.43 as the beta driver, for both the 750 Ti and the 670. So some work is needed there. Installing a new version of the drivers from the NVIDIA website proved far more fruitful - the new install picked up the 340.43 WHQL driver, which was released during our testing, and updated flawlessly.

The user interface is slick, allowing for many customisations. We'd like to see unsupported settings hidden, or at least clearly marked as only supported on some hardware. The LED Visualizer, for example, lets you open the applet only to be disappointed that it's not supported.

Conclusion

This is not your top of the line graphics card. That's alright, because it's not supposed to be. The benchmarks have come through reasonably - this is a GPU we'd recommend for those who wish to spend under $250 on a GPU and walk away with a performer that should play a great many games at very playable framerates. The low power draw will be a great drawcard for many, as it is easily integrated into existing systems.

We will have this GPU on display at Respawn LAN v32 - if you're coming to the LAN, please check the card out and feel free to ask any questions.

The Gigabyte Black Edition 750Ti GPU is suitable for:

- Medium spec gaming PCs

- Home Theatre PCs or systems where quiet cooling is required even when running the GPU at high load.

- SLI 2x in a Mac Pro 2009-2012 model for incredible performance

- Upgrading older graphics in 4+ year old PCs

- Systems with low power PSUs (350W+)

- Powering a 4K display through dual HDMI

- Overclocking, thanks to support for an external power source allowing power boost.

- GPU-based scrypt coin mining - while ASIC mining is more efficient, this is the most efficient performance per watt GPU on the market today.

- Playing most mid-range PC games such as Counter-Strike Source, DOTA 2, Team Fortress 2, Flatout 2 and so on.

It is not suitable for:

- Providing an alternative heat source for cold winter nights

- Fans that sound like a jet engine for enhanced audible realism while playing flight simulators or mining for dogecoin, much noise, very whirrrr.

- High performance gaming. It's not a high end card, but we're looking forward to the next NVIDIA product on the Maxwell architecture.

- NVIDIA G-SYNC or DisplayPort connectivity - sadly, this GPU doesn't support DisplayPort output.

Zardoz Gigabyte GeForce GTX 750Ti Review Aug 10 2014, 07:49 PM


Lysdexia Looks hot, but why would anyone buy this specific ... Aug 21 2014, 09:30 PM

